
UESTC (2018.09-2022.07)

I'm interested in model quantization, which I believe is one of the current trends in AI.

You can find the full list of publications on Google Scholar.

In this paper, we present BiFSMN, an accurate and extremely efficient binary network for keyword spotting (KWS). BiFSMN outperforms existing methods on various KWS datasets and achieves an impressive 22.3x speedup and 15.5x storage saving on edge hardware.
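
Much of the efficiency of binary networks in general comes from replacing multiply-accumulate with bitwise operations on packed sign bits. The sketch below is a generic illustration of that idea, not BiFSMN's actual kernels: it assumes values are binarized with `sign` (mapping 0 to +1), packs 8 signs per byte, and computes a {-1, +1} dot product with XNOR and popcount. The helper names `binarize`, `pack_bits`, and `xnor_popcount_dot` are hypothetical.

```python
import numpy as np

def binarize(x):
    # Sign binarization to {-1, +1}; zero is mapped to +1 (an assumption).
    return np.where(x >= 0, 1, -1).astype(np.int8)

def pack_bits(b):
    # Encode +1 as bit 1 and -1 as bit 0, packing 8 signs into each byte.
    return np.packbits(b > 0)

def xnor_popcount_dot(pa, pb, n):
    # For {-1, +1} vectors: dot = (#agreeing signs) - (#disagreeing signs) = 2 * agree - n.
    xnor = np.bitwise_not(np.bitwise_xor(pa, pb))
    # Slice to n to drop the zero padding np.packbits adds to the last byte.
    agree = int(np.unpackbits(xnor)[:n].sum())
    return 2 * agree - n

rng = np.random.default_rng(0)
a = rng.standard_normal(100)
w = rng.standard_normal(100)
ba, bw = binarize(a), binarize(w)
packed_a, packed_w = pack_bits(ba), pack_bits(bw)
dot = xnor_popcount_dot(packed_a, packed_w, 100)
print(dot, a.astype(np.float32).nbytes, packed_a.nbytes)  # float32 bytes vs packed bytes
```

Packing gives a theoretical 32x storage reduction over float32 (here 400 bytes vs 13 bytes). Measured end-to-end gains such as the 15.5x reported above are typically lower because parts of a real model usually stay in higher precision.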

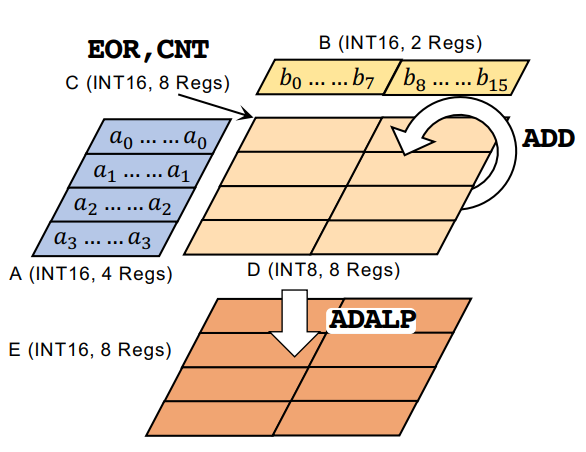

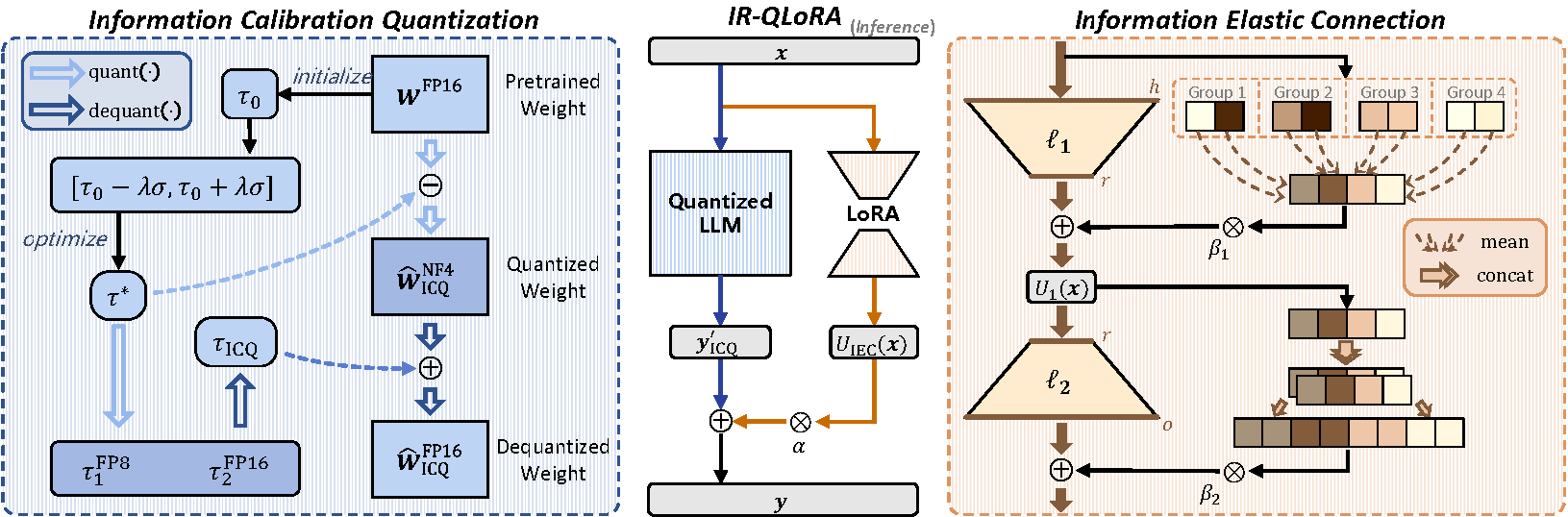

In this paper, we present IR-QLoRA, a novel method that pushes quantized LLMs fine-tuned with LoRA to be highly accurate through information retention. IR-QLoRA relies on two techniques derived from a unified information perspective: (1) statistics-based Information Calibration Quantization, which allows the quantized parameters of the LLM to accurately retain the original information; and (2) finetuning-based Information Elastic Connection, which enables LoRA to perform elastic representation transformation with diverse information.
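
The idea of calibrating a quantizer so that the quantized parameters retain more information can be illustrated by searching for a shift that maximizes the entropy of the quantized codes. This is a minimal sketch under stated assumptions, not the IR-QLoRA implementation: it uses a simple symmetric uniform 4-bit quantizer rather than the NF4 quantizer used in QLoRA, and the grid-searched shift `tau` as well as the helpers `quantize_uniform`, `code_entropy`, and `calibrate_shift` are hypothetical.

```python
import numpy as np

def quantize_uniform(w, bits=4):
    # Symmetric absmax uniform quantizer: integer codes in [-levels, levels].
    levels = 2 ** (bits - 1) - 1
    scale = max(float(np.max(np.abs(w))) / levels, 1e-12)  # guard against all-zero input
    codes = np.clip(np.round(w / scale), -levels, levels).astype(int)
    return codes, scale

def code_entropy(codes):
    # Shannon entropy (bits) of the empirical distribution of quantized codes.
    _, counts = np.unique(codes, return_counts=True)
    p = counts / counts.sum()
    return float(-(p * np.log2(p)).sum())

def calibrate_shift(w, bits=4, num_candidates=41, search_range=0.5):
    # Hypothetical grid search: pick tau that maximizes the entropy of codes of (w - tau).
    best_tau = 0.0
    best_h = code_entropy(quantize_uniform(w, bits)[0])
    for tau in np.linspace(-search_range, search_range, num_candidates) * w.std():
        h = code_entropy(quantize_uniform(w - tau, bits)[0])
        if h > best_h:
            best_tau, best_h = float(tau), h
    return best_tau, best_h

def dequantize(codes, scale, tau):
    # Undo the scale and the calibration shift.
    return codes * scale + tau

rng = np.random.default_rng(0)
w = rng.standard_normal(4096) * 0.02 + 0.01  # synthetic block of weights with a small offset
tau, h = calibrate_shift(w)
codes, scale = quantize_uniform(w - tau)
w_hat = dequantize(codes, scale, tau)
print(tau, h, float(np.abs(w - w_hat).max()))
```

Because the candidate set includes the unshifted quantizer as a baseline, the calibrated entropy is never lower than that of plain absmax quantization; whether higher code entropy also lowers downstream error depends on the model and is not guaranteed by this sketch.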